Agentic AI is moving software beyond simply providing answers. It now plans, uses tools, and takes actions to achieve goals with limited supervision. In enterprise settings, three main patterns stand out because they align well with real-world needs: task-specific agents that handle narrow workflows with speed and discipline, multi-agent systems that coordinate specialists for complex, cross-functional goals, and human-augmented agents that build trust by keeping people involved at key checkpoints for judgment and accountability.

Across all three patterns, domain fit is decisive. What separates demo-grade systems from production-grade systems is the ability to integrate:

- Industry data, ensuring the agent works with the most relevant and contextual information.

- Validated tools, relying on tested and trusted resources rather than unverified ones.

- Policy-aligned prompts and runtime guardrails, keeping outputs consistent with regulations and organizational standards.

Midway through, this article introduces InterspectAI’s practitioner perspective on vertical agents to illustrate how these patterns translate into dependable outcomes in regulated, high-stakes environments.

Task‑specific agents

Task-specific agents are narrow specialists designed to execute a single function or a tightly scoped workflow with consistency and speed. They wrap deterministic tools and rely on clear acceptance criteria, which makes them easier to measure and govern.

- Examples: Customer intent triage and retrieval-grounded answers, policy-aware refund checks, invoice or claim parsing with validation, and symptom capture that structures intake into fields ready for the Electronic Health Record (EHR).

- Where this fits: Repetitive, rules-driven work with stable inputs and clear outputs. Goals center on latency, accuracy, and throughput at a single step.

- Industry implications: Retail and e-commerce improve first-contact resolution; BFSI (banking, financial services, and insurance) shortens KYC (Know Your Customer), invoice capture, and claims intake with auditability; healthcare standardizes intake and prior-authorization prechecks with traceability.
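As a rough illustration of a task-specific agent, the sketch below implements a policy-aware refund check as one deterministic function with explicit outcomes. The thresholds, field names, and reason codes are hypothetical and chosen only for this example; a real deployment would load them from the organization's own policy.

```python
from dataclasses import dataclass

# Hypothetical policy values, chosen only for illustration.
MAX_REFUND_DAYS = 30
AUTO_APPROVE_LIMIT = 100.00


@dataclass(frozen=True)
class RefundRequest:
    order_id: str
    amount: float
    days_since_purchase: int


@dataclass(frozen=True)
class RefundDecision:
    order_id: str
    outcome: str       # "approve", "deny", or "escalate"
    reason_code: str   # short machine-readable explanation for audit


def check_refund(request: RefundRequest) -> RefundDecision:
    """Apply deterministic policy rules in a fixed order."""
    if request.amount <= 0:
        return RefundDecision(request.order_id, "deny", "INVALID_AMOUNT")
    if request.days_since_purchase > MAX_REFUND_DAYS:
        return RefundDecision(request.order_id, "deny", "OUTSIDE_WINDOW")
    if request.amount > AUTO_APPROVE_LIMIT:
        return RefundDecision(request.order_id, "escalate", "ABOVE_AUTO_LIMIT")
    return RefundDecision(request.order_id, "approve", "WITHIN_POLICY")
```

Because every path returns an outcome together with a reason code, the acceptance criteria can be checked with ordinary unit tests, which is part of what makes this pattern easy to measure and govern.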

Multi‑agent systems

Multi-agent systems coordinate several specialists—planner, researcher, solver, and reviewer—under an orchestration layer to tackle complex, cross-functional objectives. The upside is adaptability and coverage; the trade‑off is coordination overhead and a stronger need for runtime guardrails.

- Examples: Supply chain control towers that combine forecasting, inventory, logistics, and exception handling; cloud operations copilots that align observability, auto-scaling, and cost control; research automation with roles for researcher, summarizer, and critic.

- Where this fits: Objectives that span multiple steps or domains, benefit from parallelism and role specialization, and require resilience when one path fails.

- Industry implications: Manufacturing reduces downtime by aligning planning, quality, and maintenance teams; logistics preserves Service Level Agreements (SLAs) through dynamic routing and carrier negotiation; capital markets separate alpha discovery from risk and compliance teams.
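The orchestration idea can be sketched in a few lines. In this minimal example each specialist (planner, researcher, solver, reviewer) is a plain function standing in for a model or tool call, and a hypothetical `orchestrate` function passes shared state between them while recording a trace. All names here are assumptions for illustration, not a reference to any particular framework.

```python
from typing import Callable, Dict, List

State = Dict[str, object]
Agent = Callable[[State], State]


def planner(state: State) -> State:
    # Break the goal into ordered steps (hard-coded stand-in for a model call).
    return {**state, "plan": ["gather facts", "draft answer"]}


def researcher(state: State) -> State:
    # Collect supporting material for the goal.
    return {**state, "facts": [f"fact about {state['goal']}"]}


def solver(state: State) -> State:
    # Produce a draft that depends on the researcher's output.
    facts = state["facts"]
    return {**state, "draft": f"Answer based on {len(facts)} fact(s)"}


def reviewer(state: State) -> State:
    # Approve only when both facts and a draft are present.
    approved = bool(state.get("facts")) and bool(state.get("draft"))
    return {**state, "approved": approved}


def orchestrate(goal: str, pipeline: List[Agent]) -> State:
    """Run each specialist in order and record which roles executed."""
    state: State = {"goal": goal, "trace": []}
    for agent in pipeline:
        state = agent(state)
        state["trace"] = [*state["trace"], agent.__name__]
    return state


result = orchestrate("late shipment", [planner, researcher, solver, reviewer])
```

This sequential pipeline leaves out parallelism and retries. The recorded trace is one simple way to make the coordination overhead visible when a run needs to be inspected.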

Human‑augmented agents

Human-augmented agents keep people in the loop at explicit checkpoints (plan review, action gating, or post-hoc verification), with explanations on record. This pattern prioritizes trust, accountability, and explainability over maximum autonomy.

- Examples: Clinical summarization and coding with clinician sign‑off before EHR commit; underwriting assistants that draft decisions and route exceptions for human approval; legal discovery copilots that propose categorizations with cited evidence for attorney review.

- Where this applies: Regulated, high-liability, or ethically sensitive workflows; ambiguous data or context where expert judgment is crucial; situations that require documented oversight.

- Industry implications: Healthcare improves documentation speed while preserving quality and fairness; insurance increases throughput without weakening compliance posture; public sector enhances accuracy for citizen services with auditability.
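One way to picture action gating is a small function that lets low-risk actions through and routes everything else to a person, keeping the explanation on record either way. The risk labels, the `ask_human` callback, and the record fields below are hypothetical; in practice the callback would be backed by a review queue or approval interface.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ProposedAction:
    description: str
    risk: str          # "low" or "high"; the labels are illustrative
    explanation: str   # rationale kept on record for reviewers


@dataclass
class ReviewRecord:
    action: str
    decided_by: str    # "auto" or "human"
    approved: bool
    explanation: str


def gate(action: ProposedAction,
         ask_human: Callable[[ProposedAction], bool],
         log: List[ReviewRecord]) -> bool:
    """Auto-approve low-risk actions; route everything else to a person."""
    if action.risk == "low":
        approved, decided_by = True, "auto"
    else:
        approved, decided_by = ask_human(action), "human"
    log.append(ReviewRecord(action.description, decided_by, approved,
                            action.explanation))
    return approved
```

Anything not explicitly labeled low risk falls through to the human path, so an unexpected label fails safe instead of being approved automatically.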

Choosing the right pattern

- Scope and complexity: Choose task-specific agents for narrow, stable workflows; multi-agent systems for cross-functional, multi-step goals; and human-augmented agents when oversight is mandatory or risk is high.

- Risk and governance: As autonomy and coordination increase, invest in runtime guardrails (allowlists, monitoring, reason codes, immutable logs) and add human checkpoints where potential harm or cost is significant.

- Operating model: Start with a narrow, high‑ROI agent; extend orchestration once data quality and governance are proven; layer human review into sensitive decisions to build durable trust.
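The runtime guardrails mentioned above (allowlists, reason codes, immutable logs) can be approximated with a short sketch. The tool names and reason codes are hypothetical, and the hash chain only makes the log tamper-evident; true immutability would still depend on where the log is stored.

```python
import hashlib
import json
from typing import Dict, List

ALLOWED_TOOLS = {"search_kb", "lookup_order"}  # hypothetical tool names


def _digest(prev_hash: str, entry: Dict[str, str]) -> str:
    # Hash the previous entry's hash together with this entry's content.
    payload = prev_hash + json.dumps(entry, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def request_tool(tool: str, log: List[Dict[str, str]]) -> bool:
    """Allow only allowlisted tools and append a hash-chained log entry."""
    allowed = tool in ALLOWED_TOOLS
    entry = {
        "tool": tool,
        "outcome": "allowed" if allowed else "blocked",
        "reason_code": "ON_ALLOWLIST" if allowed else "NOT_ON_ALLOWLIST",
    }
    prev_hash = log[-1]["hash"] if log else ""
    log.append({**entry, "hash": _digest(prev_hash, entry)})
    return allowed


def verify_log(log: List[Dict[str, str]]) -> bool:
    """Recompute the chain; any edited or reordered entry breaks it."""
    prev_hash = ""
    for record in log:
        entry = {k: v for k, v in record.items() if k != "hash"}
        if record["hash"] != _digest(prev_hash, entry):
            return False
        prev_hash = record["hash"]
    return True
```

Blocked requests are logged alongside allowed ones, so reviewers can see what an agent attempted as well as what it did.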

InterspectAI, in context

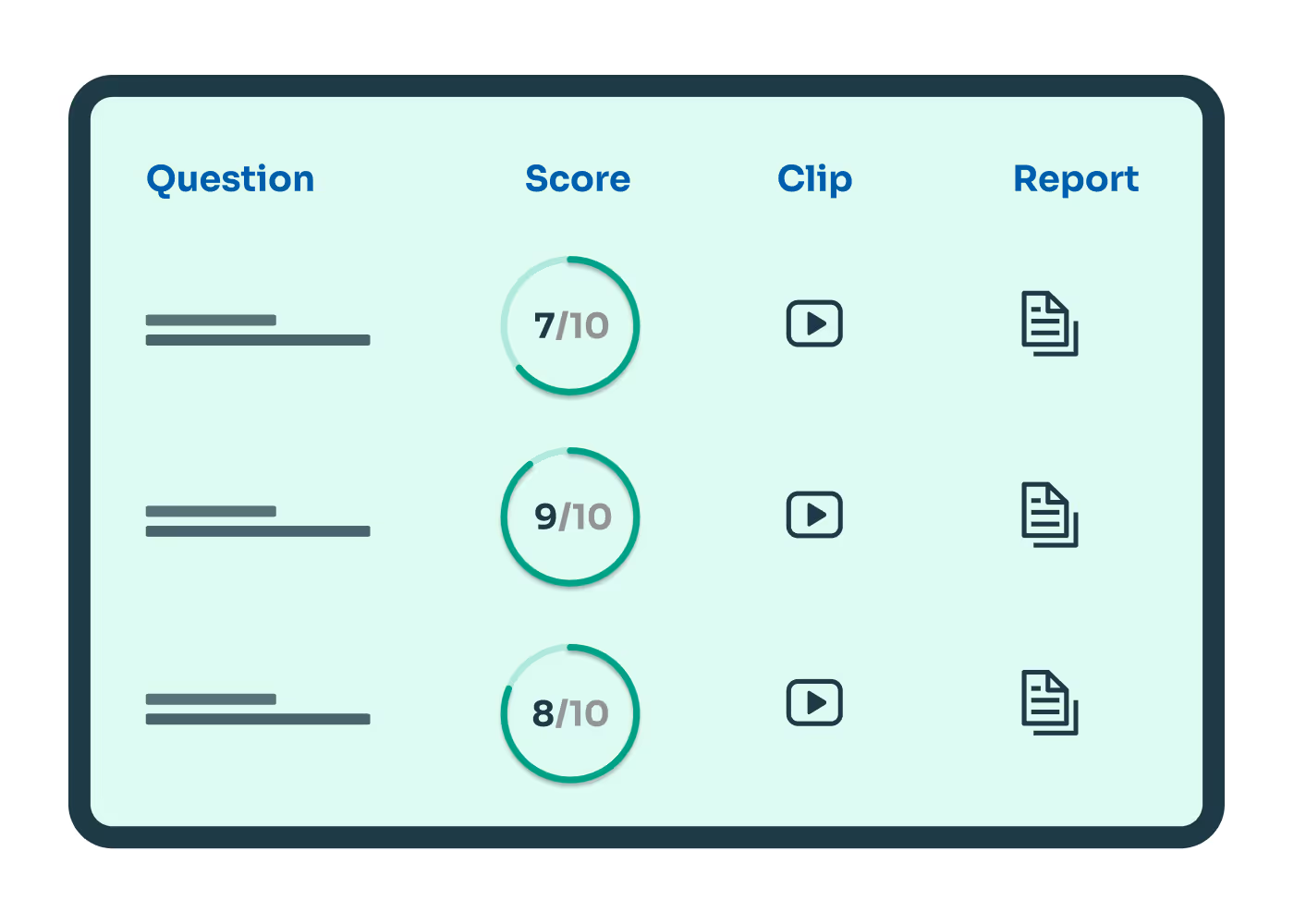

InterspectAI is a practitioner of vertical, domain‑specific agent deployments for interviewing, assessments, and other high‑stakes conversational workflows. The approach emphasizes:

- Verticalization: Tuning agents to industry data, tools, and policies so outputs match real‑world constraints and language.

- Guardrails by design: Encoding fairness, privacy, and safety principles into pre‑ and post‑decision checks, scoping tool access, and capturing explanations on record.

- Evidence and traceability: Replayable sessions, structured outputs, and audit‑ready logs that accelerate governance without slowing delivery.

These principles reflect the day-to-day realities in regulated environments such as healthcare, finance, and the public sector, where accuracy, transparency, and compliance must coexist with speed and efficiency.

Conclusion

Viewing agentic AI through three lenses (task-specific, multi-agent, and human-augmented) helps align architecture with reality: speed and consistency for narrow tasks, adaptability for cross-domain objectives, and trust where human judgment is essential. The common multiplier is verticalization, which combines domain data, validated tools, and policy-aligned prompts with runtime guardrails and immutable evidence.

A pragmatic path is to start narrow, prove value and data quality, then scale orchestration while adding human checkpoints where the stakes rise. This is the discipline InterspectAI practices in high-stakes conversational workflows: design for the domain first, embed guardrails by default, and make decisions auditable. Done this way, agentic systems move beyond demos to dependable, industry-grade outcomes.

FAQs

1. What’s the core difference among the three categories?

Task-specific agents execute one narrow function; multi-agent systems coordinate specialists for multi-step goals; human-augmented agents add explicit human checkpoints for judgment and decision-making.

2. Do multi‑agent systems consistently outperform single agents?

No. They excel at complex objectives but add overhead due to orchestration; for simple tasks, single agents are faster and easier to manage.

3. When is human‑in‑the‑loop non‑negotiable?

In regulated, safety‑critical, or high‑liability workflows where documented human judgment and traceability are required.

4. What’s a pragmatic adoption path?

Start with a high-impact, task-specific agent, add orchestration for adjacent steps once it's stable, and introduce human checkpoints for sensitive decisions.
