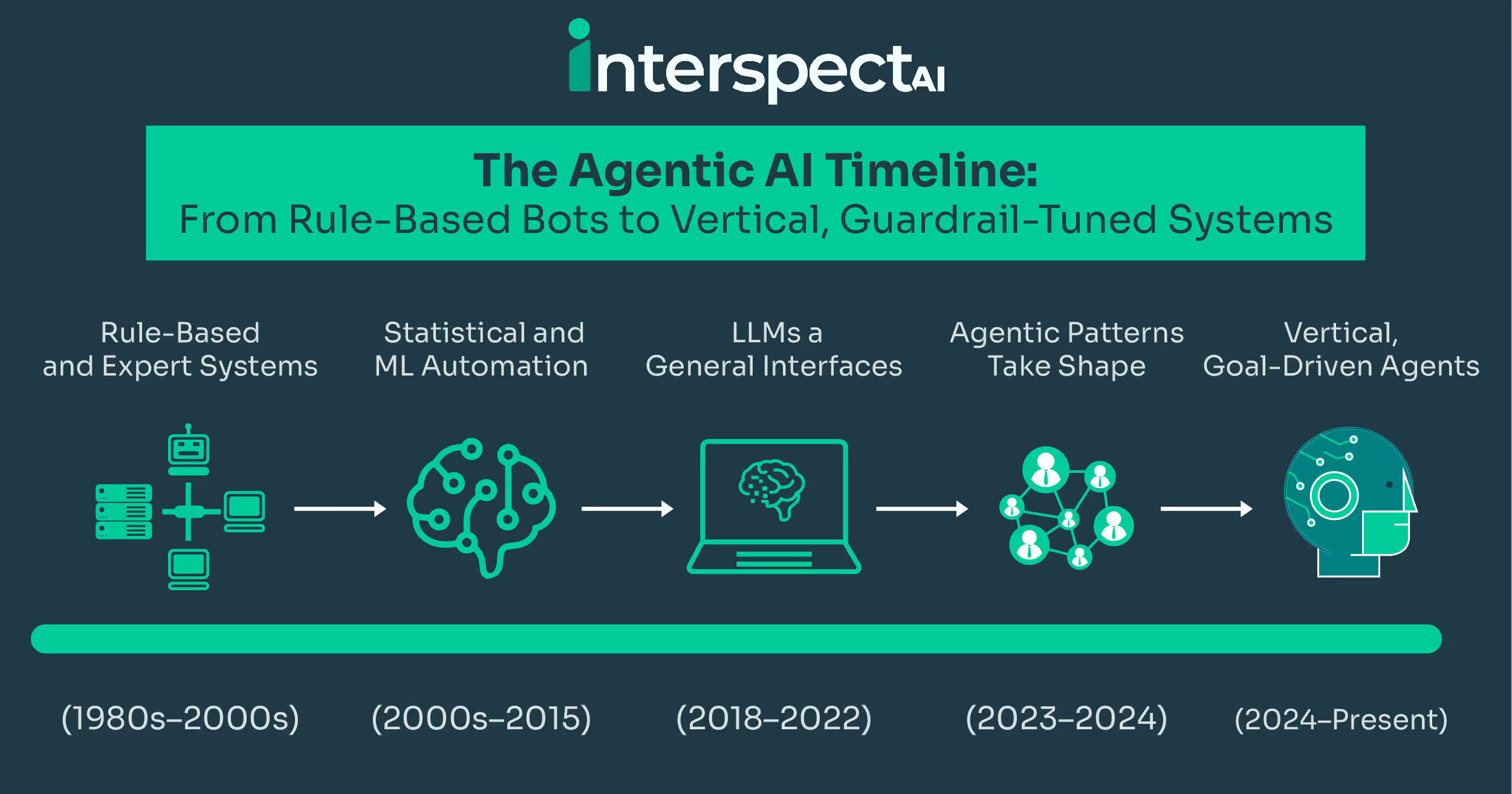

The Agentic AI Timeline: From Rule-Based Bots to Vertical, Guardrail-Tuned Systems

Software has steadily evolved from static programs into systems capable of reasoning, planning, and acting with autonomy. Agentic AI represents the latest stage in this progression—tools that integrate cognition, memory, and decision policies to achieve goals with minimal oversight.

The journey has not been linear. Each new stage of development—from rules-based systems to statistical models, large language models (LLMs), and now goal-driven agents—has built on the last, overcoming previous limitations while introducing new challenges. What began as simple automation has now matured into vertical agents that deliver industry-specific accuracy, reliability, and auditability. This evolution reflects not just advances in algorithms, but also the development of governance and guardrails that made autonomy viable at scale.

Early Days: Rule-Based and Expert Systems (1980s–2000s)

The first wave of AI relied on deterministic if-then rules and structured knowledge bases. These systems excelled at narrow, repeatable tasks such as medical diagnosis checklists or credit approval workflows. Their strengths were transparency and traceability—the underlying logic could explain every decision.

Yet they were brittle. Any deviation from predefined conditions led to failure, and adapting them to new contexts required costly re-engineering. The limitations of rigidity set the stage for the next chapter in AI’s evolution.
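A minimal sketch of the if-then style described above, using a credit approval check. The rule names, field names, and thresholds are hypothetical and chosen only for illustration; they do not come from any real underwriting policy.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Rule:
    name: str
    condition: Callable[[dict], bool]
    decision: str


# Hypothetical rules, evaluated in order; the first match wins.
RULES: List[Rule] = [
    Rule("low_credit_score", lambda a: a["credit_score"] < 600, "reject"),
    Rule("high_debt_ratio", lambda a: a["debt_to_income"] > 0.45, "reject"),
    Rule("standard_approval", lambda a: a["credit_score"] >= 600, "approve"),
]


def decide(applicant: dict) -> Tuple[str, str]:
    """Return (decision, name of the rule that produced it)."""
    for rule in RULES:
        if rule.condition(applicant):
            return rule.decision, rule.name
    raise ValueError("no rule matched")
```

Every decision comes back with the name of the rule that fired, which is the traceability these systems were valued for. The brittleness is just as visible: an applicant record that lacks the `debt_to_income` field raises a `KeyError` instead of producing a decision.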

Statistical and ML Automation (2000s–2015)

The introduction of supervised learning models marked a shift from handcrafted rules to data-driven decision-making. Algorithms for classification, extraction, and scoring automated tasks like spam detection, fraud monitoring, and document tagging with greater accuracy and efficiency.

Despite their advances, these models were largely single-step: they could answer a question or label an input, but they could not plan, reason, or retain memory. They accelerated throughput but remained task-bound, unable to operate as independent decision-makers.

LLMs as General Interfaces (2018–2022)

The arrival of pre-trained transformers, such as GPT, unlocked robust natural language understanding and generation. Suddenly, software could converse fluidly, interpret context, and generalize across domains. LLMs became universal interfaces that lowered the barriers to interacting with complex systems.

Still, these models were reactive by default. They excelled at producing coherent responses but struggled with long-horizon reasoning, multi-step tasks, or acting reliably in dynamic environments. The leap from conversation to agency required additional scaffolding.

Agentic Patterns Take Shape (2023–2024)

Researchers and practitioners began extending LLMs with agentic components, including planners to decompose goals, scratchpads for reasoning, retrieval mechanisms for context, and orchestrators to coordinate roles. Agents could now use tools and APIs, recall past interactions, and refine their own outputs.

This introduced new risks. As systems gained autonomy, questions of safety, accountability, and oversight became critical. Guardrails—ranging from allowlists and policy filters to monitoring and audit trails—emerged as necessary infrastructure. The goal was clear: harness the creativity of LLMs while constraining them within reliable, transparent boundaries.
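As a rough sketch of how three of these guardrails can sit in front of tool calls, the wrapper below combines an allowlist, a simple policy filter, and an audit trail. The tool names, the `GuardedExecutor` class, and the SSN-like pattern are assumptions made for illustration, not a reference to any particular framework.

```python
import re
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

ALLOWED_TOOLS = {"lookup_order", "send_summary"}        # hypothetical tool names
BLOCKED_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")  # SSN-like strings


@dataclass
class GuardedExecutor:
    tools: Dict[str, Callable[..., Any]]
    audit_log: List[dict] = field(default_factory=list)

    def call(self, tool_name: str, **kwargs: Any) -> Any:
        # Allowlist: only explicitly approved tools may run.
        if tool_name not in ALLOWED_TOOLS:
            self._record(tool_name, kwargs, "denied", "tool_not_allowlisted")
            raise PermissionError("tool_not_allowlisted")
        # Policy filter: refuse arguments that look like sensitive identifiers.
        if any(BLOCKED_PATTERN.search(str(v)) for v in kwargs.values()):
            self._record(tool_name, kwargs, "denied", "policy_filter_match")
            raise PermissionError("policy_filter_match")
        result = self.tools[tool_name](**kwargs)
        self._record(tool_name, kwargs, "allowed", "ok")
        return result

    def _record(self, tool: str, args: dict, outcome: str, reason: str) -> None:
        # Audit trail: log argument names only, so the log holds no sensitive values.
        self.audit_log.append(
            {"tool": tool, "arg_names": sorted(args), "outcome": outcome, "reason": reason}
        )
```

Denied calls are logged before the exception is raised, so the audit trail captures attempts as well as completed actions.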

Vertical, Goal-Driven Agents (2024–Present)

The current stage of evolution emphasizes verticalization: building agents that are tuned to specific industries, data schemas, and decision-making policies. A repeatable blueprint has emerged: an LLM-based cognition core enhanced with domain-specific cognitive skills, validated tools, memory, and governance mechanisms.

Vertical agents stand apart because they deliver accuracy and trust in real-world workflows. In fields such as healthcare, finance, and customer service, they combine domain-specific heuristics with runtime guardrails to ensure that outputs are not only correct but also compliant and auditable. Autonomy became production-ready when cognitive breadth met governance depth.

Today’s Agentic Stack at a Glance

- Cognition and planning: Decomposing tasks, reasoning across steps, and tracking progress.

- Cognitive skills: Domain-packaged functions such as underwriting heuristics or clinical abstractions.

- Tools and data plane: Retrieval systems, enterprise APIs, and validation layers for factual grounding.

- Memory: Short-term scratchpads and long-term profiles that sustain continuity.

- Guardrails: Policy filters, allowlists, monitoring, and explanations of record to enforce governance and ensure compliance.

Each layer represents both a technical milestone and an evolutionary response to earlier shortcomings.
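To make the layering concrete, here is a deliberately small sketch of how the pieces might fit together in one loop. The planner is a hard-coded stub standing in for an LLM call, and the tool names (`retrieve_policy`, `summarize`) are hypothetical; a production stack would be considerably more involved.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, dict]  # (tool name, keyword arguments)


def plan(goal: str) -> List[Step]:
    """Cognition and planning: a stub that a real system would replace with an LLM."""
    return [("retrieve_policy", {"topic": goal}), ("summarize", {})]


def retrieve_policy(memory: List[str], topic: str) -> str:
    """Tools and data plane: stands in for a retrieval system."""
    return f"policy text about {topic}"


def summarize(memory: List[str]) -> str:
    """Cognitive skill: stands in for a domain-packaged function."""
    return f"summary of: {memory[-1]}"


TOOLS: Dict[str, Callable[..., str]] = {
    "retrieve_policy": retrieve_policy,
    "summarize": summarize,
}
ALLOWLIST = {"retrieve_policy", "summarize"}  # guardrail: approved actions only


def run_agent(goal: str) -> List[str]:
    memory: List[str] = []  # memory: short-term scratchpad
    for tool_name, args in plan(goal):
        if tool_name not in ALLOWLIST:
            memory.append(f"blocked: {tool_name}")
            continue
        memory.append(TOOLS[tool_name](memory, **args))
    return memory
```

Calling `run_agent("refunds")` returns the scratchpad contents, first the retrieved text and then the summary built from it, which shows each step reading what earlier steps wrote.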

Practitioner Lens: InterspectAI

In practice, this evolution is visible in how organizations approach high-stakes, conversational workflows. InterspectAI, for instance, applies the vertical agent blueprint to contexts where fairness, accuracy, and auditability cannot be compromised.

Its approach reflects three principles:

- Domain first: Agents are aligned with industry-specific data, schemas, and decision-making policies, thereby increasing accuracy and trust.

- Guardrails by design: Safety, privacy, and fairness are embedded as runtime checks, scoped tool access, and transparent decision logs.

- Evidence as a feature: Every interaction can be replayed, audited, and improved through immutable records and structured outputs.

These principles are treated as core design elements rather than add-ons. This mirrors the broader shift in the field: autonomy succeeds not only through more capable models but also through architectures that embed governance into every decision cycle.
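One common way to approximate immutable, replayable records is a hash-chained, append-only log. The sketch below is an assumption about how such a record could be built in general; it is not a description of InterspectAI's implementation, and the function names are illustrative.

```python
import hashlib
import json
from typing import List

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(prev_hash: str, payload: dict) -> str:
    # Canonical JSON keeps the hash stable regardless of key order.
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append(log: List[dict], payload: dict) -> None:
    """Add an entry whose hash covers both its payload and the previous hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"payload": payload, "prev": prev_hash, "hash": _digest(prev_hash, payload)})


def verify(log: List[dict]) -> bool:
    """Recompute the chain; any edited or reordered entry breaks it."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Strictly speaking this makes the log tamper-evident rather than immutable: entries can still be altered in storage, but `verify` will report the change. Pairing it with write-once storage is one way to close that gap.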

Looking Ahead

The story of agentic AI is one of expanding horizons matched by increasing responsibility. Rule-based systems provided control, statistical models brought accuracy, LLMs unlocked universal interfaces, and agentic scaffolding added planning and memory. Verticalization fused these advances with guardrails to create dependable decision-makers fit for regulated industries.

The next steps are pragmatic: start with high-impact but bounded use cases, invest in data quality and validated tools, extend cognition where it creates real value, and ensure every layer, from planning to memory to tool use, is aligned with governance. Agentic AI's evolution demonstrates that autonomy and accountability are not trade-offs but parallel requirements. Together, they define the path from simple automation to systems that act with purpose and reliability.

FAQs

What distinguishes agentic systems from chatbots?

Agents integrate planning, memory, and tools to pursue goals, while chatbots primarily converse without autonomous action.

Why are vertical agents outperforming generic ones?

By aligning with industry data, validated tools, and policy-aware prompts, vertical agents achieve higher accuracy, safety, and adoption.

Are multi-agent systems always better than single agents?

Not necessarily. Multi-agent setups excel at complex, cross-functional objectives but introduce coordination overhead. Single agents remain optimal for narrow, stable tasks.

What guardrails are essential for production?

Core mechanisms include action allowlists, policy filters, input/output monitoring, reason codes, immutable logs, and human checkpoints for sensitive steps.
